Capturing the Student Voice

If we genuinely want to make learning personalized, then we need to consider the student voice. Most systems today are excellent at capturing “hard data”: what did the student do, how long did the student spend doing it, what was the outcome? However, there is much more to the learning process than what is captured by these metrics. To get a richer and more complete picture of students’ experience, we have to listen to them. How are they feeling? What are their opinions? What is their feedback on their learning? Getting answers to these types of questions can be particularly tricky in the online environment, where there is little or no face-to-face contact among the students or between the students and their instructors. We need some way of capturing the student voice.

In this post, we will lay out an overview of the features we are working on to capture this data. In later posts, we will go into more detail on the research behind why we did things the way we did, what the data may tell us, and how instructors and institutions can make the most of this data.

The Picture So Far

There are many aspects of the student voice and many ways in which we could approach capturing it. Discussion boards undoubtedly give one way of allowing students to express themselves, to communicate with each other and their instructors, and to ask and answer questions. Discussion boards are highly flexible, but this flexibility is itself a source of difficulty. The data is highly unstructured, and it is challenging to get a clear picture of what is happening, or what the students are saying, without some human intervention and interpretation.

The public nature of discussion boards can also be both an advantage and a disadvantage. You can reach a broad audience and have high levels of engagement but, on the flip side, students may feel reluctant to share their emotions or struggles in a public forum.

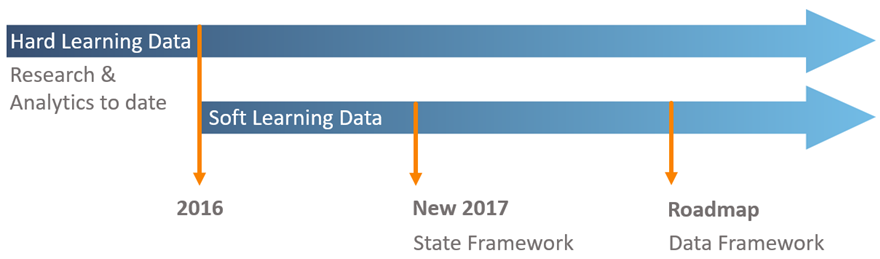

Prior to the September 2017 release of our Realizeit platform, we had worked with hard data; however, the soft data that captures the more personal side of students is something we have been thinking about for many years. In 2016 we started to put some of our ideas together and came up with two approaches that we are offering to institutions, instructors, and students to capture soft data.

The first approach is our State Framework, a lightweight feature to measure a single data point. The more extensive and comprehensive piece is our Data Framework, which is part of our roadmap for an upcoming release. It is worth pointing out that both pieces are frameworks with a flexible structure that can be adapted to capture a range of possible data points and used in a variety of contexts. This also makes them great research tools, as we will be able to reuse them to capture alternative metrics in future research projects.

It is important to consider not only what data you are capturing but also how you capture it. Both approaches involve interrupting the learning process to some extent, so we have to be careful in how we design them. In the next section, we’ll give a bit more background on the State Framework.

State Framework

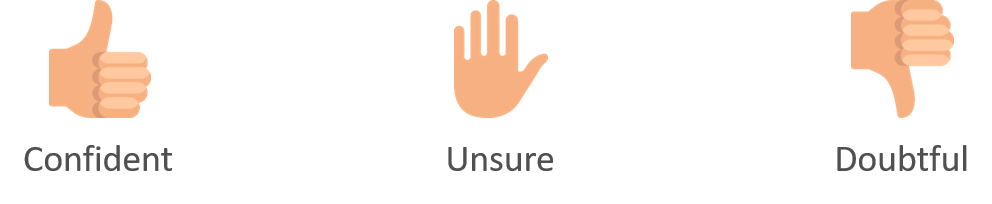

We define the State Framework as a generalized feature that allows students to provide a single data input in real time on an ad hoc basis. So, what does that mean? With the State Framework, we wanted a simple means of capturing a metric that can change regularly and over a short time period, such as students’ emotions or their confidence level. We wanted a way for students to let us know how these things are changing in real time, while disrupting their learning as little as possible.

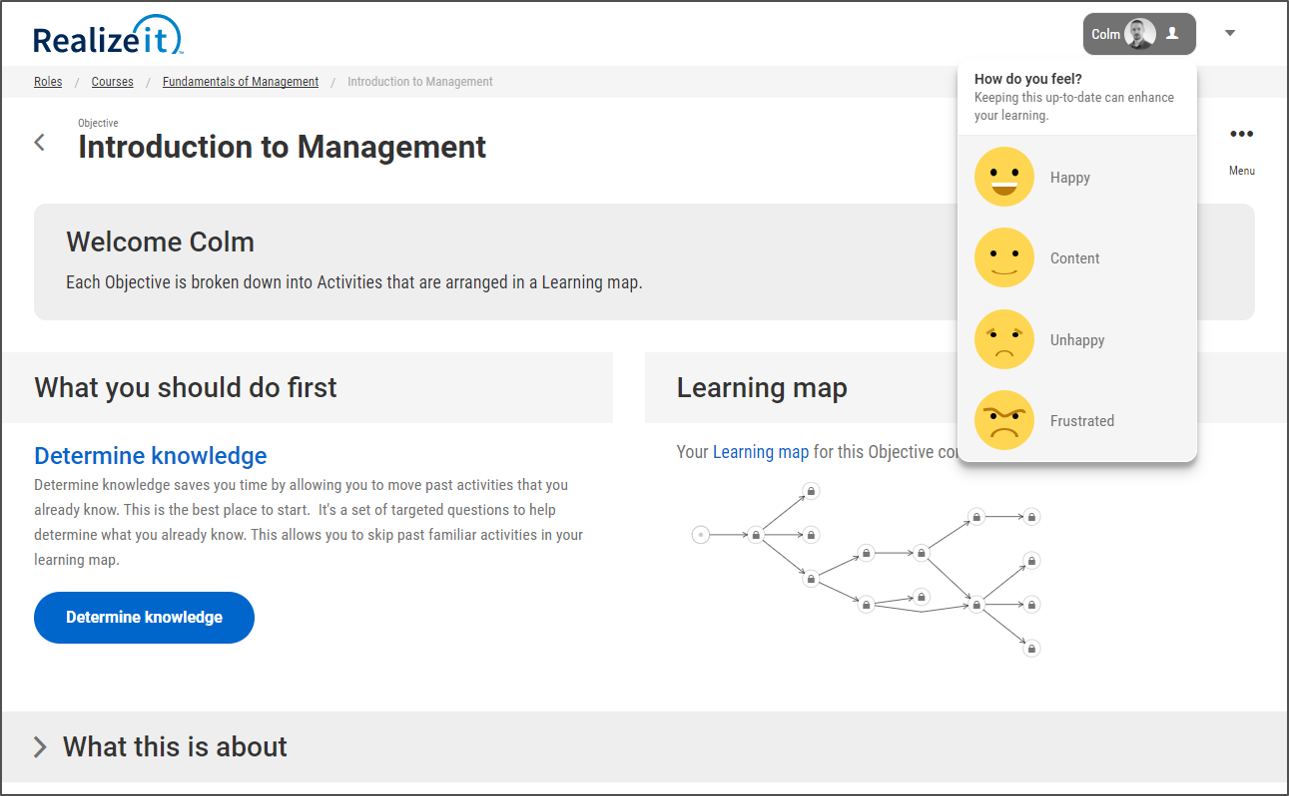

The State Framework displays a small popup to the students and allows them to answer a question quickly by selecting one of several options. Students can use this feature any time they want, and a prompt can be set to remind them at regular intervals to update it. The input is not tied to one area of the system and can be updated whenever students see fit, such as during a lesson. Because of the hard data we capture in the background, we know what the students were doing just before they updated, so we have context for why they changed their State Framework input values.
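To make the idea of pairing a state update with its context more concrete, here is a minimal sketch in Python. It is not how the Realizeit platform implements this: the `ActivityEvent` and `StateUpdate` shapes, the `context_for` helper, and the sample values (including the emotion label) are all hypothetical and chosen only for illustration. It simply looks up the most recent activity a student recorded at or before the moment they updated their state.

```python
from bisect import bisect_right
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ActivityEvent:
    """A piece of 'hard data': what a student did and when (hypothetical shape)."""
    student_id: str
    timestamp: datetime
    description: str


@dataclass(frozen=True)
class StateUpdate:
    """A single ad hoc input from the popup (hypothetical shape)."""
    student_id: str
    timestamp: datetime
    metric: str  # e.g. "emotion" or "confidence"
    value: str   # one of the options offered in the popup


def context_for(update: StateUpdate, events: list[ActivityEvent]) -> Optional[ActivityEvent]:
    """Return the student's most recent activity at or before the update, if any."""
    own = sorted(
        (e for e in events if e.student_id == update.student_id),
        key=lambda e: e.timestamp,
    )
    times = [e.timestamp for e in own]
    # Index of the first event strictly after the update time.
    idx = bisect_right(times, update.timestamp)
    return own[idx - 1] if idx > 0 else None


# Illustrative data only; none of these values come from a real system.
events = [
    ActivityEvent("s1", datetime(2017, 9, 4, 10, 0), "started lesson: Fractions"),
    ActivityEvent("s1", datetime(2017, 9, 4, 10, 12), "answered question incorrectly"),
    ActivityEvent("s2", datetime(2017, 9, 4, 10, 13), "completed lesson: Decimals"),
    ActivityEvent("s1", datetime(2017, 9, 4, 10, 30), "completed lesson: Fractions"),
]
update = StateUpdate("s1", datetime(2017, 9, 4, 10, 14), "emotion", "frustrated")

context = context_for(update, events)
print(context.description if context else "no prior activity")
```

In this made-up example, the update at 10:14 is matched with the incorrect answer at 10:12, which is the kind of context that can help explain why a student changed their input.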

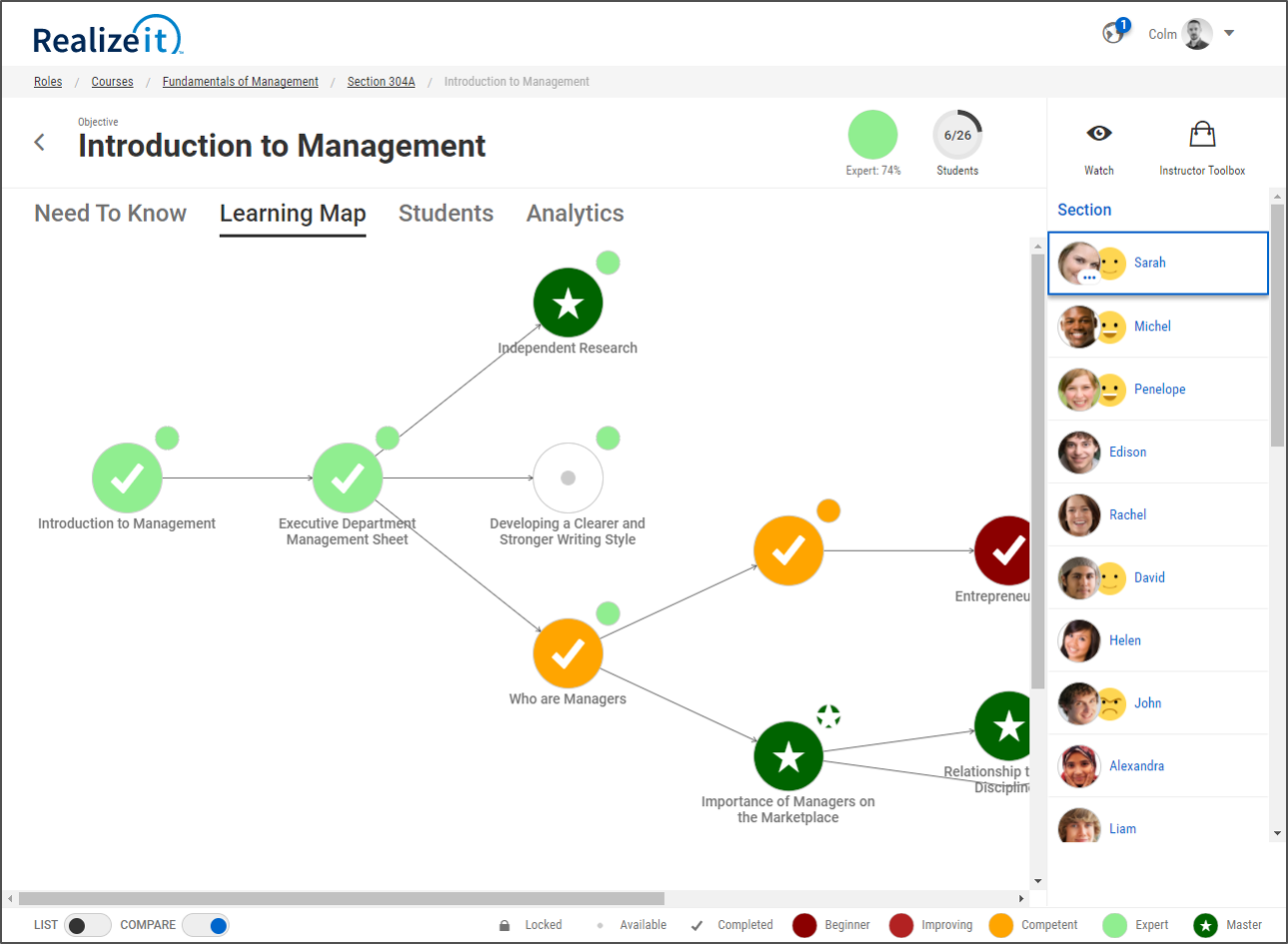

In the screenshot above, we are using the State Framework to capture students’ affective, or emotional, state. This is our internal goal for the State Framework and is included in the system by default. In follow-up posts, we will discuss our motivation for this, the research behind the emotions we chose, and what we learn from the data.

Other uses for the State Framework could include measuring students’ confidence in their mastery of the learning they have completed.

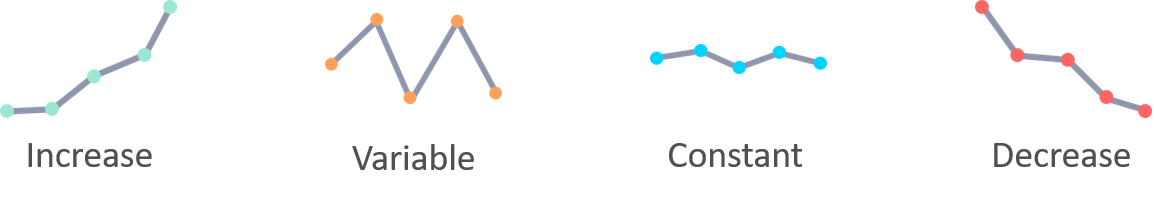

Alternatively, we could ask them to predict how their level of mastery will change over the remainder of the course.

The framework is fully customizable by the institution or the instructor to capture a new metric, or to make adjustments to our default framework for capturing student affective state.

We provide the students’ most up-to-date values to their instructors and mentors so that they get additional information on what is happening with their students in real time, beyond their engagement and attainment metrics.
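As a rough illustration of what “most up-to-date values” means in practice, the sketch below reduces a stream of state updates to the latest value per student. Again, this is an assumption-laden example rather than the platform’s actual code: the `StateUpdate` record, the `latest_values` function, and the sample confidence labels are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class StateUpdate:
    """One state input from a student (hypothetical shape)."""
    student_id: str
    timestamp: datetime
    value: str


def latest_values(updates: list[StateUpdate]) -> dict[str, str]:
    """Keep only the most recent value reported by each student."""
    latest: dict[str, StateUpdate] = {}
    for update in updates:
        current = latest.get(update.student_id)
        if current is None or update.timestamp > current.timestamp:
            latest[update.student_id] = update
    return {student_id: u.value for student_id, u in latest.items()}


# Illustrative data only; updates may arrive in any order.
updates = [
    StateUpdate("s1", datetime(2017, 9, 4, 10, 14), "not confident"),
    StateUpdate("s2", datetime(2017, 9, 4, 10, 20), "confident"),
    StateUpdate("s1", datetime(2017, 9, 4, 10, 45), "confident"),
    StateUpdate("s2", datetime(2017, 9, 4, 9, 50), "unsure"),
]

summary = latest_values(updates)
for student_id in sorted(summary):
    print(student_id, summary[student_id])
```

Comparing timestamps rather than relying on arrival order means a late-arriving older update does not overwrite a newer one.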

Data Framework

While the State Framework provides a quick glimpse of a single metric, the Data Framework is designed to go beyond this and capture a more extensive range of possible data values. It is built to capture more detailed feedback from the students and can be customized to prompt for feedback based on specific triggers in the system. Given that it can capture a broader range of more detailed information, it is potentially more disruptive to the learning process. Possible uses include delivering surveys or asking for feedback on learning content. As mentioned, this feature is part of our roadmap for a future release, and we’ll be sure to revisit it here in more detail once it is released.

Wrap up

We hope that gives you a good idea of our progress on the tools we will use to capture the student voice: the soft data that isn’t traditionally measured in systems and can only be obtained by listening to the student. This is a work in progress and will continue to evolve as we learn. From the research point of view, the most exciting parts come next: what data can we capture using these tools, what can we learn about the students, how do we make effective use of the data, and how can we use it to have a positive impact on learning?

If you are interested in using one of the frameworks as part of a research project, or in working with us and our partner institutions on understanding the data we gather, then please get in touch.